Measuring and Improving Software Quality with Roadie Backstage

By David Tuite • September 1st, 2022

We are building Tech Insights on top of Roadie Backstage. It will help you ensure that all of your software assets have the support and maintenance they need in order to keep you secure, compliant, productive, agile, and available.

Our customers will be able to use Tech Insights to spot unloved services in production, find teams who need more support in order to produce top quality software, and help engineering organizations understand where the bar is.

Roadie users will be able to create scorecards which define what it means to build quality software within their company. They will be able to apply these scorecards to software in the Backstage catalog. They will see reports to help identify software which needs improvement, and they will be empowered to work with owners to deliver said improvements.

Tech Insights will be the first major proprietary Roadie feature. It is in development as I write this post, and we are already working with design partners on rollout.

Throughout our work with dozens of customers, it has become clear that implementing Backstage and cataloging software is only the first step on a longer journey for most organizations.

If you would like to supercharge your Backstage experience with this feature, please request a demo of Roadie.

Measuring the quality of software

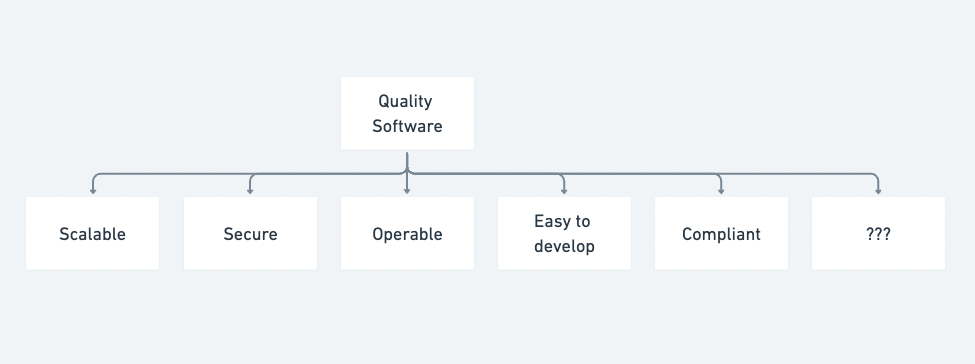

The first step towards determining whether software is of high enough quality is to define what “quality” means in your organization.

Quality is an aggregate measure which accounts for many dimensions. Quality software is usually easy to operate in production, easy to develop, secure and compliant, amongst many other attributes.

Each of these individual measures is composed of its own factors, each of which is likely tracked in a different tool.

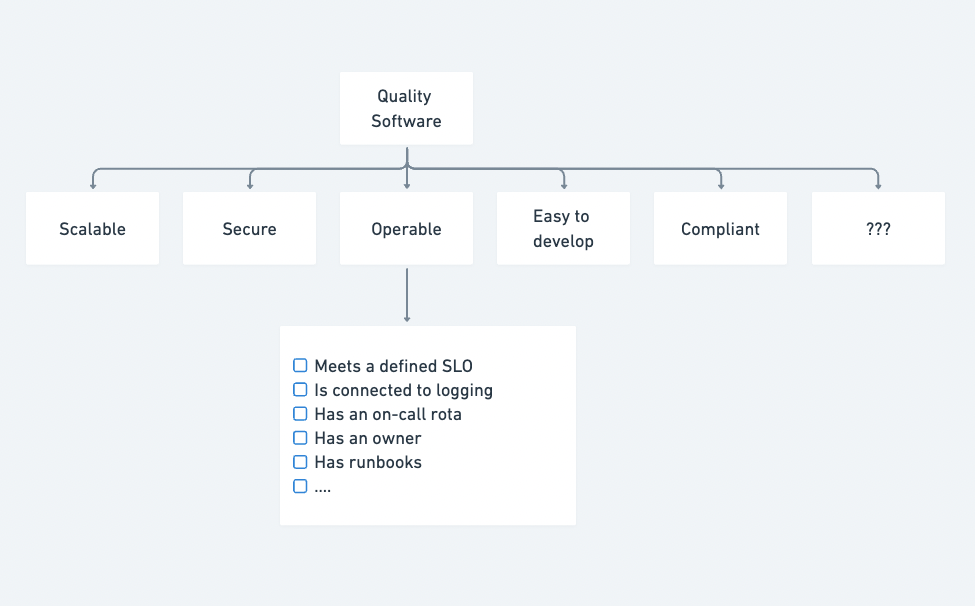

Here are some simple attributes you might check to get a measure of operability:

- Software which is easy to operate in production usually has adequate uptime. This might be measured against a service level objective recorded in Datadog.

- It is usually connected to a logging tool. This might be Datadog again, or it might be a self-hosted ELK stack.

- The software should have an on-call rota associated with it. This would be stored in PagerDuty.

- It should have an owner who is responsible for keeping the software up-to-date and deploying it frequently. This would be stored in the Backstage catalog.

- It should have runbooks defined. These might be in a Confluence document.
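As a rough illustration, the attributes above could be expressed as simple pass/fail checks over facts gathered from each tool. This is only a sketch: `ServiceFacts`, its field names, and the check labels are hypothetical and are not part of Roadie or Backstage.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ServiceFacts:
    """Facts collected about one service. All field names are hypothetical."""
    name: str
    owner: Optional[str] = None               # e.g. from the Backstage catalog
    slo_target: Optional[float] = None        # e.g. an SLO recorded in Datadog
    measured_uptime: Optional[float] = None   # same units as slo_target
    has_logging: bool = False                 # e.g. Datadog or an ELK stack
    has_on_call_rota: bool = False            # e.g. from PagerDuty
    runbook_url: Optional[str] = None         # e.g. a Confluence document


# One small predicate per operability attribute.
OPERABILITY_CHECKS: Dict[str, Callable[[ServiceFacts], bool]] = {
    "meets uptime SLO": lambda s: (
        s.slo_target is not None
        and s.measured_uptime is not None
        and s.measured_uptime >= s.slo_target
    ),
    "connected to logging": lambda s: s.has_logging,
    "has on-call rota": lambda s: s.has_on_call_rota,
    "has owner": lambda s: bool(s.owner),
    "has runbook": lambda s: bool(s.runbook_url),
}


def evaluate(service: ServiceFacts) -> Dict[str, bool]:
    """Run every operability check and return a label -> passed mapping."""
    return {label: check(service) for label, check in OPERABILITY_CHECKS.items()}
```

Even in this toy form, each field would need to be populated from a different system, which is where most of the integration effort would go.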

Once we factor in a few of these attributes, our measurement of good software starts to look more complex.

Run the same process for scalable, secure, easy to develop, compliant and any other top-level factors and you quickly start to realize that you have to check 40+ factors and integrate with 20+ tools to determine if a piece of software is high quality or not.

Is there enough quality?

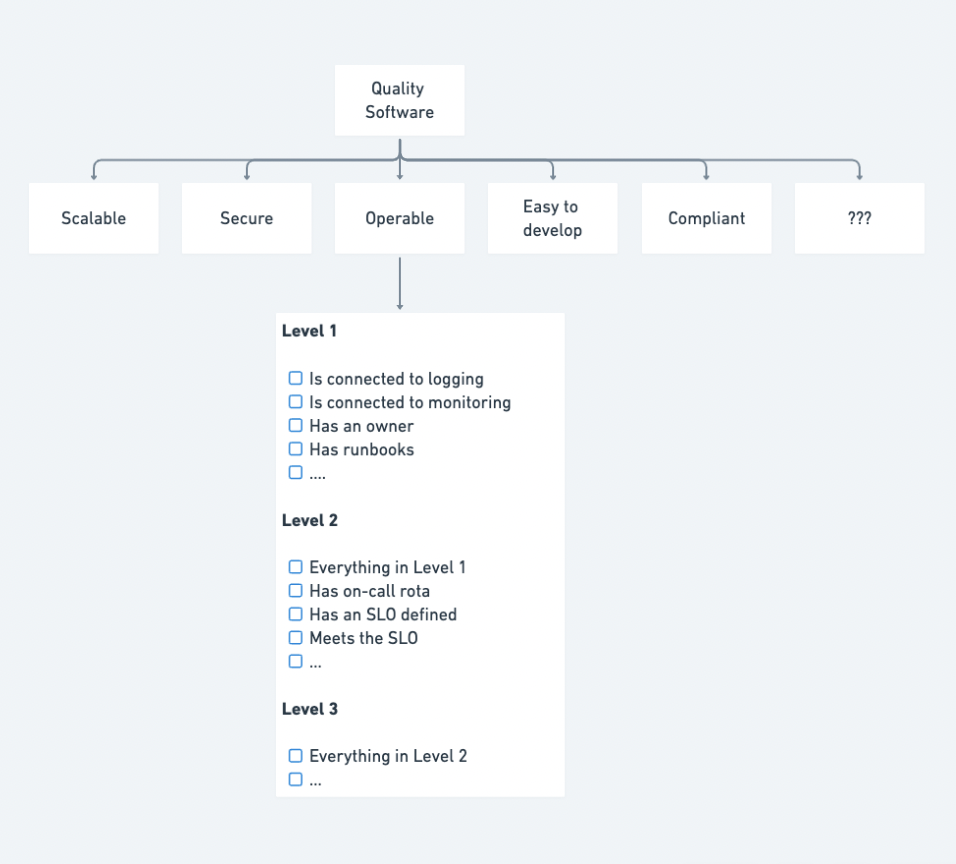

The next level of complexity comes from attempting to determine whether or not the current level of quality is “enough”.

The required level of quality will vary depending on the purpose and exposure of the software in question. Take uptime for example. Your payment processing service might need 5 nines of uptime, while some internal reporting tool might be down for 5 months before anyone notices, and that might be ok.

To account for this in the measurement, you will need to attach different expectations to different software components.
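To make the uptime example concrete, here is a small worked calculation of how much downtime per year each number of nines permits. The function name is hypothetical, and the year is assumed to be 365 days.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(nines: int) -> float:
    """Yearly downtime budget for an uptime target with `nines` nines.

    For example, nines=3 means 99.9% uptime, so 0.1% of the year may be downtime.
    """
    return MINUTES_PER_YEAR * 10 ** -nines


for nines in (2, 3, 4, 5):
    budget = allowed_downtime_minutes(nines)
    print(f"{nines} nines: {budget:.2f} minutes of downtime per year")
```

Five nines leaves a budget of roughly five and a quarter minutes per year, while two nines allows more than three and a half days. That gap is why the same uptime expectation rarely makes sense for every component.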

This is frequently done by categorizing and dividing software into tiers. Software in tier zero might be mission critical and require the highest levels of quality. Tier 3 might be far less important from an overall business standpoint, and will be subject to less stringent requirements.

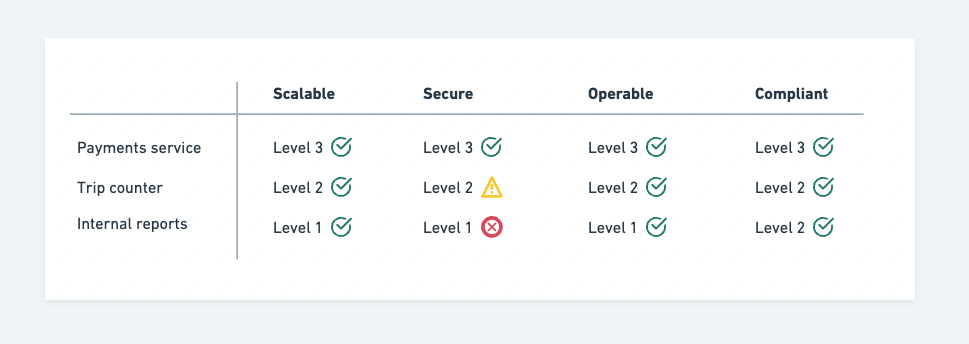

We can contextualize the measurements we defined above by defining quality levels, as shown in the diagram above.
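One way to picture tiered expectations is a lookup from tier to requirements, with a function that lists where a component falls short. The tiers, thresholds, and attribute names below are illustrative assumptions rather than recommended values.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, List


@dataclass(frozen=True)
class TierRequirements:
    """Expectations attached to one tier. Values are illustrative only."""
    min_uptime_percent: float
    required_attributes: FrozenSet[str]


# Hypothetical requirements: tier 0 is mission critical, tier 3 is least critical.
REQUIREMENTS_BY_TIER: Dict[int, TierRequirements] = {
    0: TierRequirements(99.999, frozenset({"owner", "on_call_rota", "runbook", "logging"})),
    1: TierRequirements(99.9, frozenset({"owner", "on_call_rota", "logging"})),
    2: TierRequirements(99.0, frozenset({"owner", "logging"})),
    3: TierRequirements(0.0, frozenset({"owner"})),
}


def quality_gaps(tier: int, uptime_percent: float, attributes: Iterable[str]) -> List[str]:
    """Return human-readable gaps between a component and its tier's requirements."""
    requirements = REQUIREMENTS_BY_TIER[tier]
    missing = requirements.required_attributes - set(attributes)
    gaps = sorted(f"missing {attribute}" for attribute in missing)
    if uptime_percent < requirements.min_uptime_percent:
        gaps.append(
            f"uptime {uptime_percent}% is below the "
            f"{requirements.min_uptime_percent}% target"
        )
    return gaps
```

With this shape, an internal reporting tool in tier 3 that only has an owner reports no gaps, while the same facts for a tier 0 service would produce several.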

Driving improvements in software quality

Invariably, when you define software excellence and compare the current state of the world against it, you will find gaps. This is a good thing. The next step should be to encourage the improvement of this software.

There can be a multitude of legitimate reasons why a piece of software doesn’t meet a given quality bar. Teams will constantly realign their priorities to try to match those of the wider business, and quality will sometimes suffer.

The important thing is that this quality gap is visible. We may actively choose not to rectify it, but we should at least be able to spot it and have that conversation.

Teams should be able to independently make realizations like “everyone else in the org seems to have passed the security level 1 bar… perhaps we should think about doing some security work soon”.

We believe Roadie Backstage has a role to play by increasing visibility into organizational software quality. We should nudge people towards increasing software quality, in a thoughtful and conscientious way.

We’re working on Tech Insights at Roadie

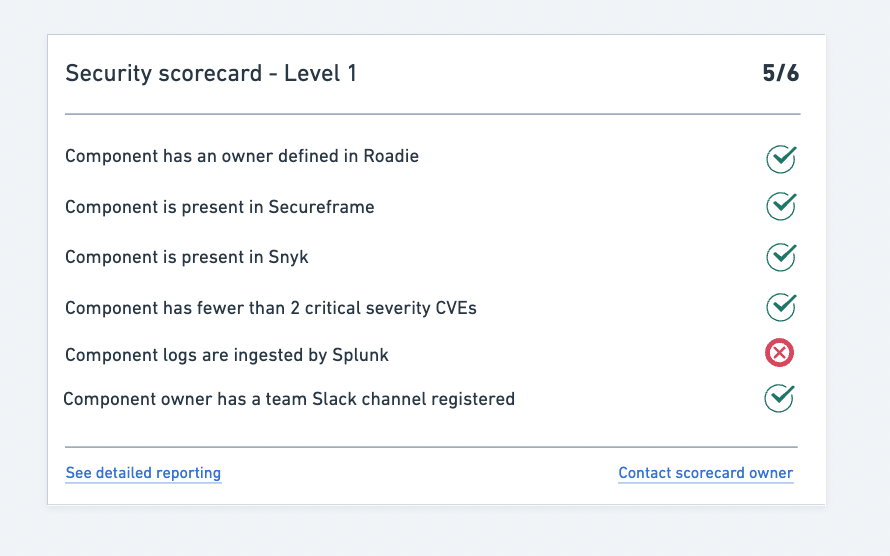

- You will be able to write Checks which continually test the software in your catalog in an automated way. These Checks can be anything from “Does the software have an SLO set in Datadog?” to “Is the log4j version semantically greater than 2.16.0?”. Checks can leverage custom data or data from the APIs of standard SaaS tools that you already use.

- You will be able to group Checks into Scorecards, and target those Scorecards to subsets of the software in the catalog. Perhaps you want to target Tier 0 and Tier 1 Java services. Perhaps you want to target Python services in the data science org.

- You will be able to slice and dice reports of software quality so that you can find software which needs more support in order to meet the quality bar.

- Teams will be carefully and conscientiously nudged towards improving the quality of their software over time.
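To give a feel for how Checks, Scorecards, and reports relate to one another, here is a minimal sketch. It is not the Tech Insights API, which this post does not describe: the `Check` and `Scorecard` classes, the entity fields, and the example catalog are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Entity = Dict[str, object]  # hypothetical flat view of one catalog entry


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '2.16.0' into (2, 16, 0) so versions compare numerically, not as text."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Check:
    name: str
    passes: Callable[[Entity], bool]


@dataclass
class Scorecard:
    name: str
    applies_to: Callable[[Entity], bool]  # selects the subset of the catalog
    checks: List[Check]


def report(scorecard: Scorecard, catalog: List[Entity]) -> Dict[str, List[str]]:
    """Map each targeted entity name to the names of the Checks it fails."""
    return {
        str(entity["name"]): [c.name for c in scorecard.checks if not c.passes(entity)]
        for entity in catalog
        if scorecard.applies_to(entity)
    }


log4j_check = Check(
    "log4j newer than 2.16.0",
    # An entity with no recorded version is treated as failing.
    lambda e: parse_version(str(e.get("log4j_version", "0"))) > (2, 16, 0),
)
slo_check = Check("SLO set", lambda e: bool(e.get("has_slo")))

# Target Tier 0 and Tier 1 Java services only.
java_scorecard = Scorecard(
    name="Critical Java services",
    applies_to=lambda e: e.get("tier") in (0, 1) and e.get("language") == "java",
    checks=[log4j_check, slo_check],
)

EXAMPLE_CATALOG: List[Entity] = [
    {"name": "payments", "tier": 0, "language": "java",
     "log4j_version": "2.17.1", "has_slo": True},
    {"name": "ledger", "tier": 1, "language": "java",
     "log4j_version": "2.9.0", "has_slo": False},
    {"name": "churn-model", "tier": 2, "language": "python"},
]
```

Calling `report(java_scorecard, EXAMPLE_CATALOG)` lists no failures for `payments` and both Checks as failing for `ledger`, and it skips `churn-model` because the Scorecard does not target it. Comparing versions as integer tuples matters here: as plain strings, "2.9.0" would sort after "2.16.0".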

We’re already rolling out early versions of this software to our design partners. If you would like to learn more, or participate in the betas, please request a demo of Roadie Backstage today.